▲ From left: Son Suk-ku of "A Killer Paradox" and the face of the child actor, rendered with deepfake technology (Source: Netflix)

The child actor who plays the younger version of Son Suk-ku's character in the Netflix original series "A Killer Paradox," released on Feb. 9, has become a hot topic on social media because he and Son look strikingly alike. At a meeting with reporters on Feb. 14, director Lee Chang-hee explained, "The child actor acted the role, but we put Son's face over his, by collecting photos of Son Suk-ku himself as a child and applying CG to them." In other words, the young face shown on screen was a synthetic one created through CG processing using deepfake technology. "Some people asked me if I should do that, but I wanted to make it more realistic," Lee said. "I have wanted to use this technology since my debut and tried many times, but the CG team turned it down because the technology was not working." "A Killer Paradox" uses deepfake technology to create past scenes of all the characters as well as the main characters' toys.

Deepfake is a portmanteau of "deep learning," an artificial intelligence technique, and "fake." It refers to image synthesis technology that utilizes GANs (generative adversarial networks). A GAN pits an AI model that produces fake content against an AI model that detects fake content, and this competition yields highly realistic fakes. "A Killer Paradox" is not the first production to use deepfake technology in entertainment. JTBC's drama "Welcome to Samdali," which ended on Jan. 21, also used it to bring back Song Hae, the national MC of the "Korean National Singing Contest." In the scene, Cho Yong-pil (Ji Chang-wook) and Jo Sam-dal (Shin Hye-sun) appear on the national singing contest as children and are asked, "What do you want to become when you grow up?" The scene was produced by gathering footage of the national singing contest from the 1990s and processing it with AI. JTBC said, "We wanted to share our longing with viewers by recreating him, who remains the eternal national MC in our hearts."

However, many voices oppose the use of deepfake technology. From July to November last year, the Hollywood actors' union (SAG-AFTRA) went on strike and refused to work on productions, arguing that many actors' jobs could be threatened if large production companies use AI-generated actors in making movies. Concerns have also been raised that the technology could be used for digital sex crimes or to manipulate public opinion. Foreign media including the New York Times reported on Jan. 26 (local time) that sexually explicit fake images bearing Taylor Swift's face had spread widely on X (formerly Twitter). Earlier that same month, on the eve of the New Hampshire primary, a fake phone call in President Biden's voice urged Democratic voters not to vote. The automated recording said, "This Tuesday's vote will only give Republicans an opportunity to re-elect Donald Trump," and caused confusion by imitating President Biden's tone.

In Korea, campaigning with AI-based deepfake videos has been banned ahead of the general election for National Assembly members in April. However, according to the National Election Commission, 129 posts had already violated the Public Official Election Act by campaigning with deepfake technology between Jan. 29 and Feb. 16. To address this issue, Naver, Kakao, and SK Communications have adopted a Joint Declaration to Prevent the Use of Malicious Election Deepfake to ensure fairness and reliability in the election process. The companies have agreed to work on detecting malicious election deepfakes and acting promptly to lower their risk, to increase transparency by disclosing their response policies, and to keep exchanging information and opinions to prevent the spread. The World Economic Forum cited AI-generated false information as one of the risks facing the world in its Global Risks Report 2024, warning that various forms of false information can cause political or social confusion. Given the remarkable reach and influence of deepfakes and the social changes they are expected to bring, it is time to make technical, social, and legal preparations for their responsible development and use.

By Kim So-ha, reporter lucky.river16@gmail.com

Copyright © The Campus Journal. Unauthorized reproduction and redistribution prohibited.

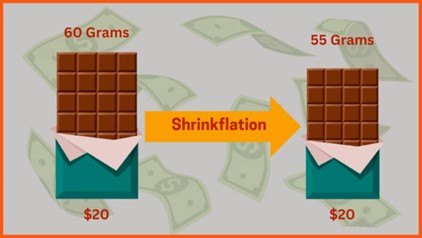

Shrinkflation, Consumer Deception

Shrinkflation, Consumer Deception